kafkaintherun

who tells the birds where to fly?

Lens at Work:

A Day in Chinatown, Manhattan, New York.

Recent Posts

-

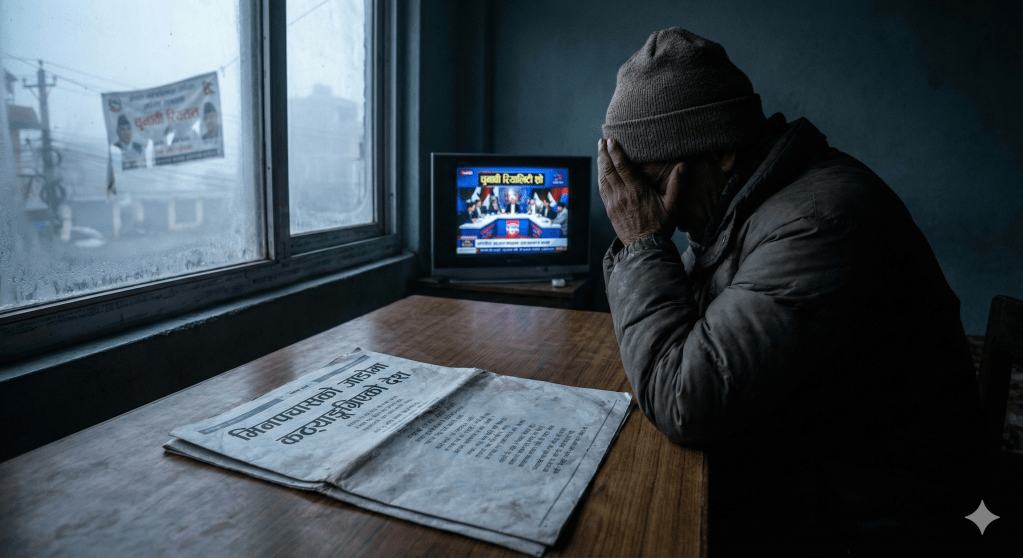

A Country Frozen Stiff in the Minpachas Winter

A country frozen stiff in the Minpachas winter: here it is not the weather but conscience that has gone cold. Alas, we find a 'nocturnal' pleasure in the freezing itself.

-

Happy Birthday

I found you a year ago on this day, and I promised to wish you not virtually the next time around. I failed the geography of that promise, but not the truth of it. This is not just pixels on a screen. It is a heartbeat sent across the wire.

-

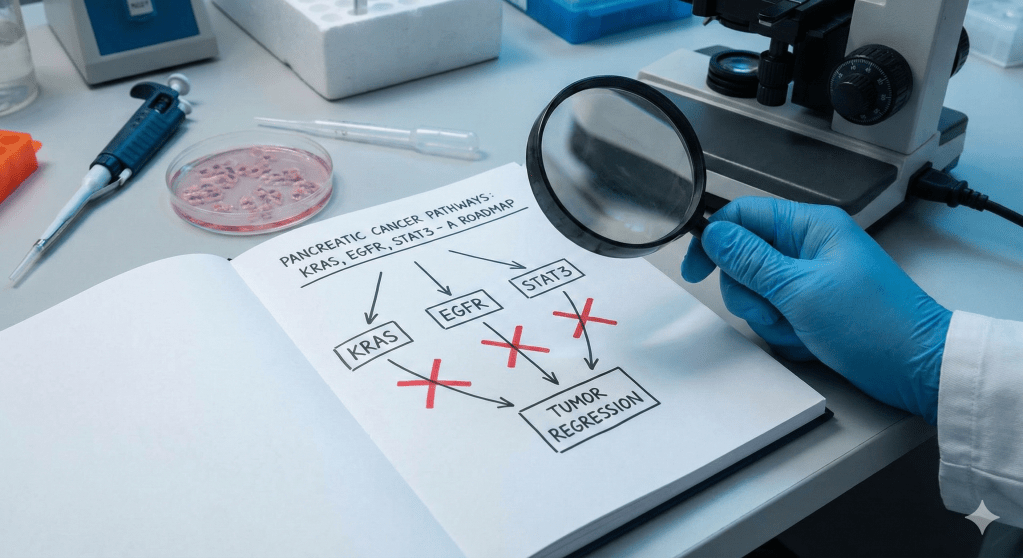

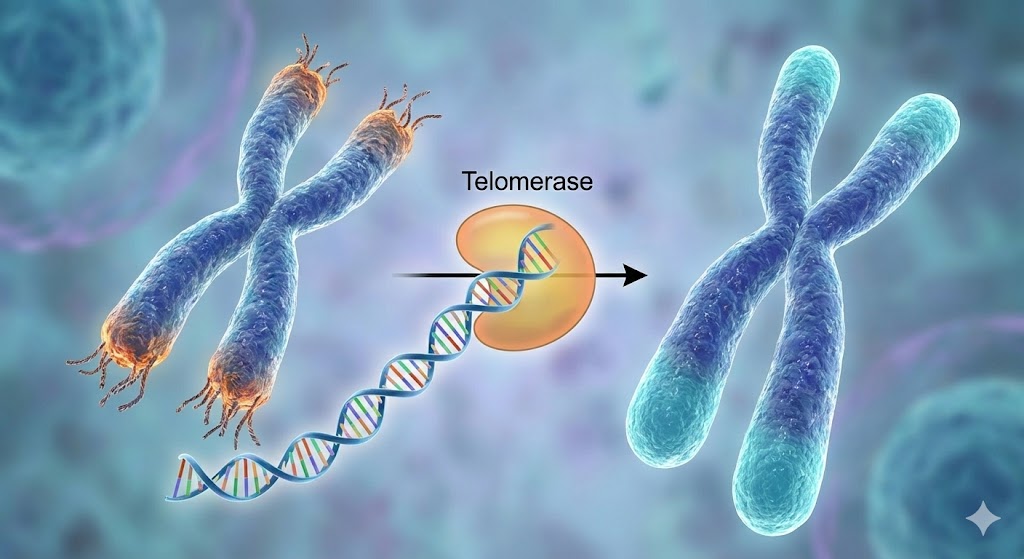

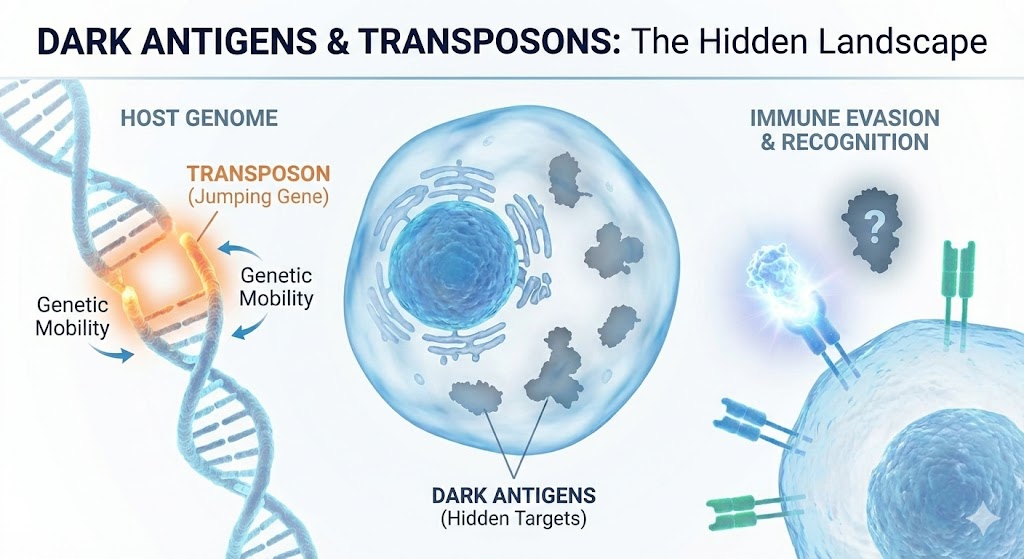

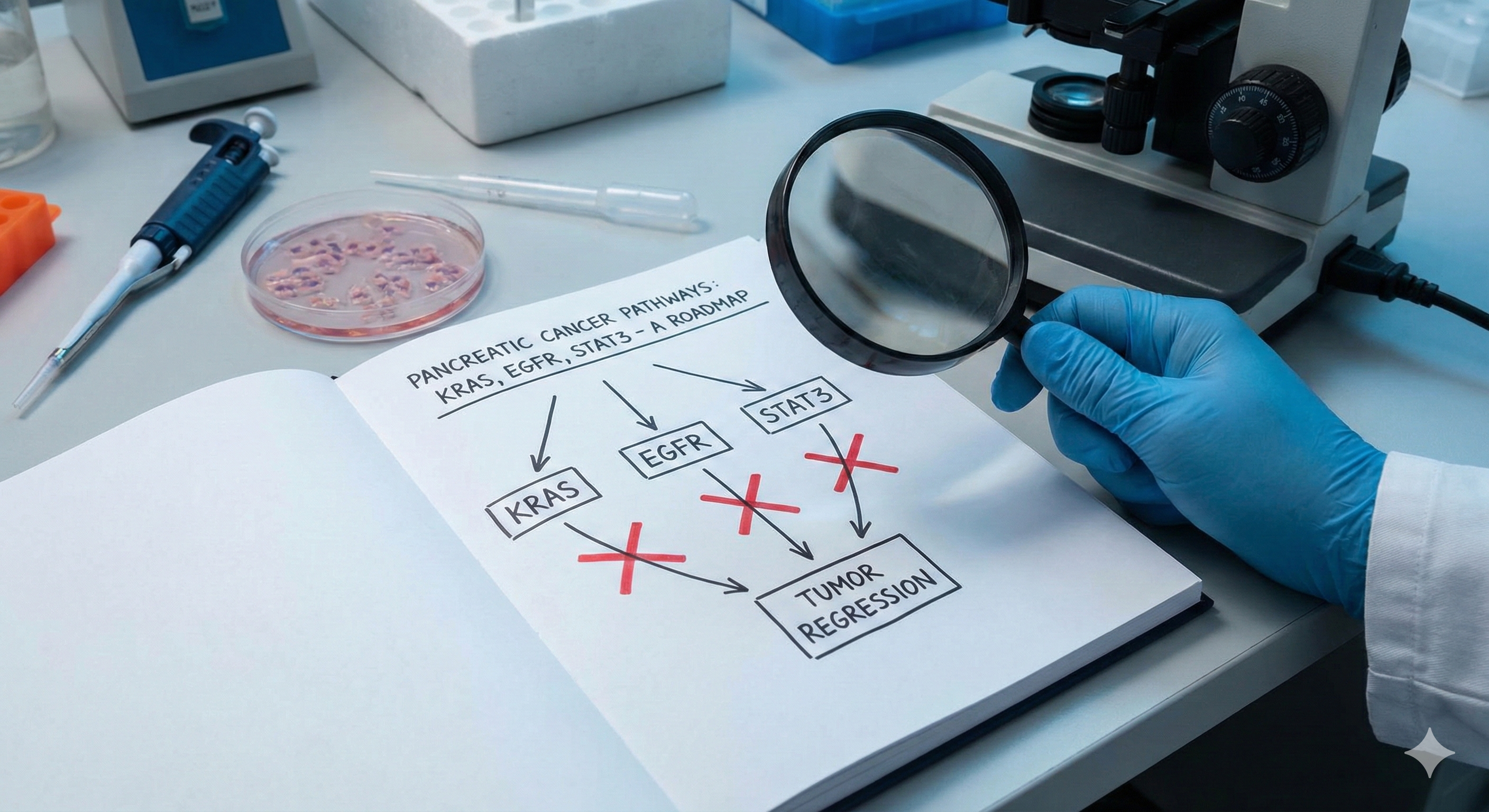

The Architecture of a Cure: Why Spain’s Pancreatic Cancer Breakthrough Is Both a Triumph and a Cautionary Tale

A major breakthrough from Spain’s CNIO claims to cure pancreatic cancer in mice. This analysis explains the ‘triple blockade’ science and why the road to human trials is still long and risky. Read the full reality check.

-

Now It Is a Spectacle

History will ask: what changed in the country after that black September? I do not know what we will say, now that new messiahs have begun carrying the dreams that died in search of good governance as raw material for elections. I am not enraged; rage is energy. I am tired of the waking dream of a new future in a country turned reality show. Now it is a spectacle.

-

Faith Before Hunger: When the Temple Birthed the Village and the Farm

Did faith hatch before the farm? Discover how 13,000-year-old ruins at Göbekli Tepe and Boncuklu Tarla are rewriting human history.

-

Congress: An Advertisement of Legacy

This party survives neither on history nor on elections, nor on B.P.'s name. It survives only as long as some shame remains. And when even the shame runs out, the party becomes not history but a monument.

-

Undeclared Love

The days move on, but time does not walk. A wave of consciousness comes, leaves your memory on the shore, and returns again to its own depths.

-

A Chance Encounter with Prithvi Narayan

Prithvi Narayan! You have come? The raised finger of the statue standing at the gate of Singha Durbar no longer points the way to lead the country; it is showing the youth the way to the 'airport'.

-

A Reunion with the Comrade

Comrade, you returned, carrying the voice of our fathers' generation. The memory of the daura suruwal still remained. Palaces, chairs, and speeches erased that memory. We grew old listening, without ever speaking our dreams. We stood, with names, without meaning.

-

A Little Hope Inside the City

But today, standing in this narrow alley, do not lie to yourself. You were not brought here. You arrived on your own, by way of tired decisions. The city did not swallow you. You yourself learned to match your breath to its rhythm. That is why the murmur of the stream now sounds loud to you, and silence makes you uneasy.


